TPLS: Optimised Parallel I/O and Visualisation

eCSE01-008

Key Personnel

PI: Dr Prashant Valluri - The University of Edinburgh

Technical: Mr Iain Bethune and Dr Toni Collis - EPCC, The University of Edinburgh

Relevant documents

Paper presented at ParCo 2015: Developing a scalable and flexible high-resolution DNS code for two-phase flows

Download the open access pre-print version of this paper.

Project summary

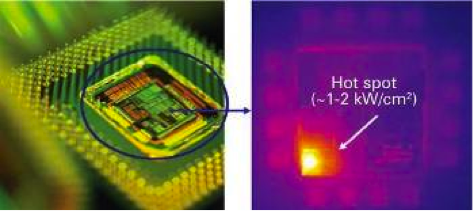

TPLS is a freely available Computational Fluid Dynamics code which solves the Navier-Stokes equations for an incompressible two-phase flow. The interface is modelled using either a level-set or a diffuse-interface method. This means that TPLS can simulate flows with complicated interfacial topology, involving a range of time- and length-scales. Such problems are common in industrial applications. Indeed, TPLS has already been used to understand the flow-pattern map in a falling-film reactor. The immediate application here was to post-combustion carbon capture, yet such reactors are ubiquitous in the chemical engineering industries. In the same way, TPLS can be used to simulate and hence control multiphase flow in the oil/gas industries, thereby contributing to flow assurance in the transport of hydrocarbons in pipelines. TPLS is also being used to simulate microelectronic cooling processes that involve phase change, such as refrigerant droplet evaporation and flow boiling in microchannels.

On the scientific side, TPLS has been used to simulate the genesis and evolution of three-dimensional waves in two-phase laminar flows. In these investigations, TPLS has revealed how three-dimensional waves form in systems where two-dimensional disturbances dominate, thereby contributing to the resolution of a paradox in the scientific literature on two-phase flows. TPLS has also revealed in detail the flow features responsible for the operational limits of the distillation, reaction and absorption columns used widely in the chemical industry. Furthermore, TPLS has helped explain the nature of thermocapillary instabilities that can alter the efficiency of refrigerants and other heat transfer fluids.

Computational Achievements

By moving from a simple master-gather I/O algorithm to parallel-NetCDF for all file output and checkpointing, the code now scales to over 3000 cores, with excellent strong scaling up to 1536 cores: a speedup of 26x and an efficiency of 72% when comparing the same simulation on one node (24 cores) and on 36 nodes (1536 cores).
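The quoted efficiency can be related to the quoted speedup and node counts. The following is a minimal sketch, assuming the usual definition of strong-scaling parallel efficiency (achieved speedup divided by the ideal speedup, here the ratio of node counts) with the one-node run as the baseline. The function name is illustrative and is not part of TPLS.

```python
def parallel_efficiency(speedup: float, baseline_nodes: int, scaled_nodes: int) -> float:
    """Strong-scaling efficiency: achieved speedup divided by the ideal speedup.

    Assumption: the ideal speedup equals the ratio of node counts.
    """
    ideal_speedup = scaled_nodes / baseline_nodes
    return speedup / ideal_speedup


# Figures quoted in the text: a 26x speedup going from 1 node to 36 nodes.
efficiency = parallel_efficiency(speedup=26.0, baseline_nodes=1, scaled_nodes=36)
print(f"efficiency = {efficiency:.0%}")  # prints "efficiency = 72%"
```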

Previously, with ASCII output, a job on a grid of the size used above generated 1.5 GB per output operation (i.e. writing the (x,y,z) coordinates, the (u,v,w) velocity vectors and the level-set function phi every 1,000 iterations). With parallel I/O and the NetCDF format, the code generates 225 MB per output operation, a reduction in size by a factor of 6.67. For the same output volume as before the eCSE work, we can therefore afford more grid points, which gives us the opportunity to reach significantly greater Reynolds numbers and to include physics such as phase change.
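The arithmetic behind the size reduction, and what it means over a whole run, can be sketched as follows. This assumes decimal units (1 GB = 1000 MB), which is what reproduces the factor of 6.67. The run length of 100,000 iterations is a hypothetical value chosen only for illustration; the output interval of 1,000 iterations is taken from the text.

```python
ASCII_OUTPUT_MB = 1500.0   # 1.5 GB per output operation, assuming 1 GB = 1000 MB
NETCDF_OUTPUT_MB = 225.0   # per output operation with parallel I/O and NetCDF


def size_reduction_factor(old_mb: float, new_mb: float) -> float:
    """Ratio of the old output size to the new output size."""
    return old_mb / new_mb


def total_output_gb(iterations: int, output_interval: int, mb_per_output: float) -> float:
    """Total data written over a run, in GB, for one output every `output_interval` iterations."""
    n_outputs = iterations // output_interval
    return n_outputs * mb_per_output / 1000.0


factor = size_reduction_factor(ASCII_OUTPUT_MB, NETCDF_OUTPUT_MB)
print(f"reduction factor = {factor:.2f}")  # prints "reduction factor = 6.67"

HYPOTHETICAL_ITERATIONS = 100_000  # illustrative run length, not from the report
OUTPUT_INTERVAL = 1_000            # output every 1,000 iterations, as in the text

ascii_total = total_output_gb(HYPOTHETICAL_ITERATIONS, OUTPUT_INTERVAL, ASCII_OUTPUT_MB)
netcdf_total = total_output_gb(HYPOTHETICAL_ITERATIONS, OUTPUT_INTERVAL, NETCDF_OUTPUT_MB)
print(f"ASCII:  {ascii_total:.1f} GB")   # prints "ASCII:  150.0 GB"
print(f"NetCDF: {netcdf_total:.1f} GB")  # prints "NetCDF: 22.5 GB"
```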

Reducing the space required to store these output files by a factor of over 6 also makes it less likely that we will have to limit how much data we retain.

The code has been refactored so that future development work will be significantly quicker and easier to debug. It also now provides more runtime options, moving away from the extensive use of hard-coded parameters.

Financial Benefits

Before the current eCSE work, the speedup for large jobs was sub-linear (exponent = 0.6) up to 1024 cores. This was purely due to serial I/O.

After the eCSE work, which involved implementing parallel I/O, we have recorded a super-linear speedup (exponent = 1.2) up to at least 576 cores and a linear speedup up to 1152 cores. This is a substantial financial saving for us, enabling us to run significantly larger jobs for much less AU consumption than before. As an example, for a typical run (1200 tasks, 22 M grid points) we estimate AU savings of around 30% relative to what was used before this eCSE work. This means that we can now afford runs with more complex physics than ever before, accelerating the ongoing research work.
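The link between the scaling exponent and AU consumption can be illustrated with a small sketch. It assumes that "exponent" refers to a power-law fit of speedup against core count, and that AU cost is proportional to cores multiplied by wall-clock time. The 24-core baseline and the function names are hypothetical choices for illustration, and the sketch is not intended to reproduce the 30% estimate above.

```python
def speedup(cores: int, baseline_cores: int, exponent: float) -> float:
    """Power-law speedup model: S = (cores / baseline_cores) ** exponent (an assumption)."""
    return (cores / baseline_cores) ** exponent


def relative_au_cost(cores: int, baseline_cores: int, exponent: float) -> float:
    """AU cost relative to the baseline run, assuming AU ~ cores x wall-clock time."""
    core_ratio = cores / baseline_cores
    return core_ratio / speedup(cores, baseline_cores, exponent)


BASELINE_CORES = 24  # hypothetical baseline (one 24-core node)
CORES = 576          # upper end of the super-linear range quoted in the text

for label, exponent in (("pre-eCSE", 0.6), ("post-eCSE", 1.2)):
    s = speedup(CORES, BASELINE_CORES, exponent)
    cost = relative_au_cost(CORES, BASELINE_CORES, exponent)
    print(f"{label}: speedup = {s:.1f}x, relative AU cost = {cost:.2f}")
```

Under this model, an exponent below 1 means the same job costs more AU as cores are added, an exponent of exactly 1 leaves the cost unchanged, and an exponent above 1 means the job becomes cheaper in AU as well as faster.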

Over the next year, we expect to spend 25 MAU on TPLS and related calculations, at a cost of £14,000. The savings also have a cumulative effect on the use of staff time (post-doc/PhD student). Whereas a typical droplet evaporation simulation would previously have taken at least 2 months of work (from set-up to complete analysis), we now estimate that it would take just over a month's work, a time saving of just under 50%.

Scientific Benefits

This improvement will not only reduce the resources needed for current simulations, but will also enable simulations on a much larger scale than was previously possible, in terms of both spatial extent and resolution. Indeed, the improvements in the code's performance and scalability mean that TPLS edges closer to being able to simulate fully turbulent flow regimes. These are precisely the flow regimes of interest in the oil and gas industries. Accurate modelling and simulation of flow regimes and of their stability are crucial to flow assurance, and TPLS will be able to make a contribution here.

Also of great interest to the microelectronics industry is the accurate quantification of the cooling needed, which is essential for increasing the lifetimes of faster processors. This cooling requirement can be estimated by rigorous TPLS calculations, which are now possible after the optimisation done under the eCSE programme. Furthermore, following the eCSE project, we are now able to study phase change under both terrestrial-gravity and microgravity conditions. This level of ability is unprecedented for complex gas-liquid systems which simultaneously undergo phase change (until now, estimates were based only on purely empirical calculations). The current simulation results will be discussed directly with companies like Selex Galileo and Thermacore who develop cooling systems for space and ground applications.