Scalable and interoperable I/O for Fluidity

eCSE01-009

Key Personnel

PI/Co-I: Dr Gerard Gorman - Imperial College, London; Dr Jon Hill - Imperial College, London

Technical: Dr Michael Lange - Imperial College, London

Relevant Documents

eCSE Final Report: Scalable and interoperable I/O for Fluidity

Unstructured Overlapping Mesh Distribution in Parallel - M. Knepley, M. Lange, G. Gorman, 2015. Submitted to ACM Transactions on Mathematical Software.

Flexible, Scalable Mesh and Data Management using PETSc DMPlex - M. Lange, M. Knepley, G. Gorman, 2015. Published in EASC2015 conference proceedings.

Project summary

Scalable file I/O and efficient domain topology management present important challenges for many scientific applications if they are to fully utilise future exascale computing resources. Designing a scientific software stack to meet next-generation simulation demands requires not only scalable and efficient algorithms to perform data I/O and mesh management at scale, but also an abstraction layer that allows a wide variety of application codes to use them, thereby promoting code reuse and interoperability. PETSc provides such an abstraction of mesh topology in the form of the DMPlex data management API.

During the course of this project PETSc's DMPlexDistribute API has been optimised and extended to provide scalable generation of arbitrarily sized domain overlaps, as well as efficient mesh and data distribution, contributing directly to the flexible and scalable domain data management capabilities of the library. The ability to perform parallel load balancing and re-distribution of already parallel meshes was added, which enables further I/O and mesh management optimisations in the future. Moreover, additional mesh input formats have been added to DMPlex, including a binary Gmsh and a Fluent-CAS file reader, which improves the interoperability of DMPlex and all its dependent user codes. A key aspect of this work was to maximise impact by adding features and applying optimisations at a library level in PETSc, resulting in benefits for several application codes.
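The idea of an arbitrarily sized domain overlap can be illustrated with a minimal sketch. This is not PETSc's implementation or API: the function `grow_overlap`, the dictionary-based mesh representation and the 8-cell chain mesh are all hypothetical, chosen only to show how each additional overlap level adds one more layer of neighbouring cells to a partition.

```python
def grow_overlap(adjacency, owner, rank, levels):
    """Return (owned, ghost) cell sets for `rank` with `levels` overlap layers.

    adjacency: dict mapping each cell to a list of neighbouring cells
    owner:     dict mapping each cell to the rank that owns it
    (Both structures are illustrative assumptions, not DMPlex data types.)
    """
    owned = {cell for cell, r in owner.items() if r == rank}
    local = set(owned)
    frontier = set(owned)
    for _ in range(levels):
        # Cells adjacent to the current frontier that are not yet local.
        new_layer = {n for cell in frontier for n in adjacency[cell]} - local
        local |= new_layer
        frontier = new_layer
    return owned, local - owned


# Hypothetical 1D chain of 8 cells (0-1-2-...-7) split across two ranks.
adjacency = {c: [n for n in (c - 1, c + 1) if 0 <= n < 8] for c in range(8)}
owner = {c: 0 if c < 4 else 1 for c in range(8)}

owned0, ghost0_level1 = grow_overlap(adjacency, owner, rank=0, levels=1)
_, ghost0_level2 = grow_overlap(adjacency, owner, rank=0, levels=2)
_, ghost1_level2 = grow_overlap(adjacency, owner, rank=1, levels=2)

print(sorted(owned0), sorted(ghost0_level1), sorted(ghost0_level2))
```

In this toy setting the overlap is computed serially from a global adjacency map; a distributed implementation has to discover the same layers through communication between neighbouring ranks, which is where scalability becomes the main concern.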

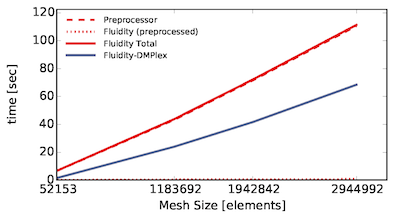

The main focus of this project, however, was the integration of DMPlex into the Fluidity mesh initialisation routines. The new Fluidity version uses DMPlex to perform on-the-fly domain decomposition, which significantly improves simulation start-up performance by eliminating a costly I/O-bound pre-processing step and improving data migration (see Illustration 1).

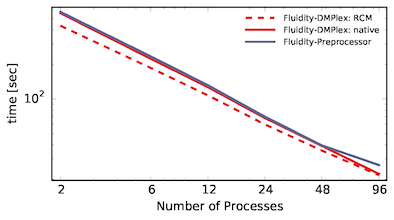

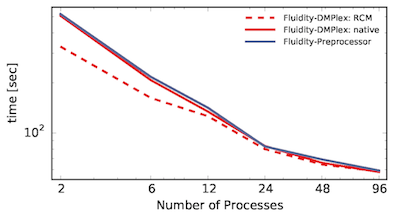

Moreover, additional mesh input formats have been added to the model via new reader routines available in the public DMPlex API. Due to the resulting close integration with DMPlex, mesh renumbering capabilities, such as the Reverse Cuthill-McKee (RCM) algorithm provided by DMPlex, can now be leveraged to improve the cache locality of Fluidity simulations. The performance benefits of RCM mesh renumbering for velocity assembly and pressure solve are shown in Illustrations 2 and 3.
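As a rough illustration of why RCM renumbering helps data locality, the following sketch implements a textbook Reverse Cuthill-McKee ordering in pure Python and compares the graph bandwidth before and after renumbering. It is not the DMPlex implementation: the function names and the scrambled 8-vertex chain are assumptions made for this example only.

```python
from collections import deque


def reverse_cuthill_mckee(adjacency):
    """Return a list `order` such that order[new_index] == old_index."""
    degree = {v: len(ns) for v, ns in adjacency.items()}
    visited = set()
    order = []
    # Restart from the lowest-degree unvisited vertex so that
    # disconnected graphs are handled as well.
    for start in sorted(adjacency, key=lambda v: (degree[v], v)):
        if start in visited:
            continue
        visited.add(start)
        queue = deque([start])
        while queue:
            v = queue.popleft()
            order.append(v)
            # Visit neighbours in order of increasing degree.
            for n in sorted(adjacency[v], key=lambda u: (degree[u], u)):
                if n not in visited:
                    visited.add(n)
                    queue.append(n)
    order.reverse()  # the "reverse" in Reverse Cuthill-McKee
    return order


def bandwidth(adjacency, order):
    """Largest index distance between any two connected vertices."""
    position = {old: new for new, old in enumerate(order)}
    return max(
        (abs(position[v] - position[n]) for v in adjacency for n in adjacency[v]),
        default=0,
    )


# Hypothetical chain of 8 vertices whose labels are deliberately scrambled.
chain = [0, 4, 1, 5, 2, 6, 3, 7]
adjacency = {v: [] for v in chain}
for a, b in zip(chain, chain[1:]):
    adjacency[a].append(b)
    adjacency[b].append(a)

order = reverse_cuthill_mckee(adjacency)
original_bw = bandwidth(adjacency, sorted(adjacency))
rcm_bw = bandwidth(adjacency, order)
print("bandwidth before:", original_bw, "after RCM:", rcm_bw)
```

A smaller bandwidth means that connected degrees of freedom sit closer together in memory, so assembly loops and sparse matrix-vector products touch fewer distinct cache lines per element.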

Summary of the software

Most of the relevant DMPlex additions and optimisations are available in the current master branch of the PETSc development version, as well as in the latest release, version 3.6. Access to a central developer package for tracking petsc-master on ARCHER has been provided by the support team and is being maintained by the lead developer until the required features and fixes are available in the latest cray-petsc packages.

The current implementation of the DMPlex-based version of the Fluidity CFD code is available through a public feature branch.

Efforts to provide the full set of Fluidity features through DMPlex are ongoing, and integration of this new feature into the next Fluidity release is actively being prepared.