Enabling high-performance oceanographic and cryospheric computation on ARCHER via new adjoint modelling tools

eCSE03-09

Key Personnel

PI/Co-I: Dr Dan Jones - British Antarctic Survey, Dr Dan Goldberg - University of Edinburgh, Dr Paul Holland - British Antarctic Survey, Dr David Ferreira - University of Reading

Technical: Dr Gavin Pringle - University of Edinburgh, Dr Sudipta Goswami - British Antarctic Survey

Relevant documents

Publication arising from the work done: Goldberg, D., Narayanan, S. H. K., Hascoët, L., & Utke, J. (2016), An optimized treatment for algorithmic differentiation of an important glaciological fixed-point problem, Geoscientific Model Development Discussions, 1-24.

Project description

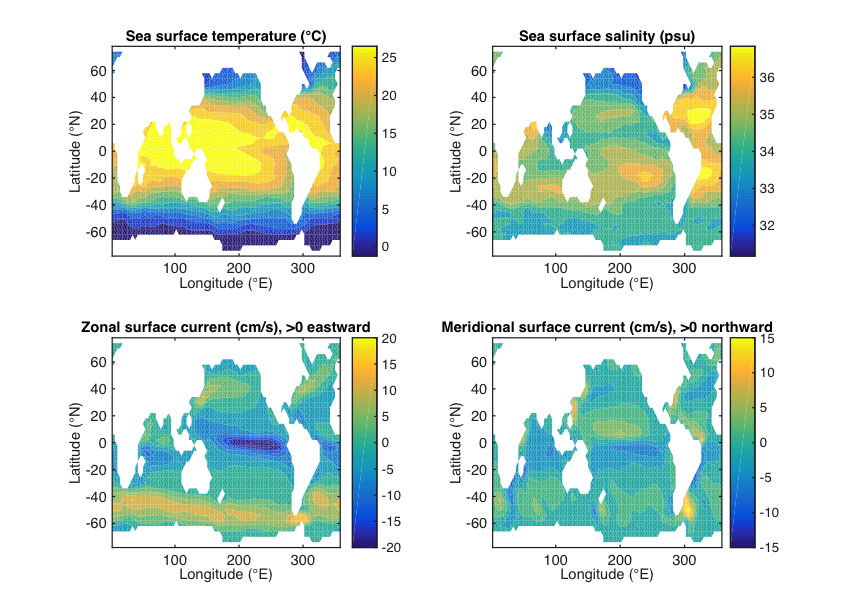

The study of the ocean and cryosphere depends crucially on computationally intensive, large-scale numerical models that must be run on HPC systems. In the "forward" configuration, these models solve time-dependent boundary value and initial value problems with an enormous number of variables (e.g. temperature, salinity, and velocity at every grid cell) and a variety of complex physical parameterisations (e.g. small-scale mixing). "Adjoint" models, which can be derived from forward models, offer an efficient way to calculate sensitivities (e.g. the sensitivity of the Gulf Stream to patterns of ice sheet melting), and are essential for constructing observationally constrained estimates of the state of the ocean (for an example, see the SOSE project: http://sose.ucsd.edu/).

In recent years, adjoint methods have yielded unique insights into the ocean and cryosphere. However, their use on large-scale problems suited to high-performance computing remains relatively limited, owing to poor access to efficient, cost-effective adjoint modelling tools. Adjoint models are computationally expensive, requiring at least five times more CPU hours than their forward versions. Even so, adjoint sensitivity calculations are much more efficient than the alternative of evaluating perturbed forward models: a single adjoint run gives the sensitivity of one scalar quantity of interest to every model input at once, whereas the perturbation approach needs a separate forward run for each input that is perturbed. Furthermore, adjoint models remain the only practical means of constructing observationally constrained estimates of the state of the ocean (i.e. state estimates) that are internally consistent, satisfying the model equations and boundary conditions. This project aimed to strengthen the UK's adjoint modelling capability by making adjoint methods more efficient and accessible on ARCHER.
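To make the efficiency argument concrete, the following is a toy sketch in Python with NumPy. The linear time-stepping "model" x_{k+1} = A x_k, the cost J = c · x_N, and all function names are illustrative assumptions with no connection to MITgcm code. The adjoint obtains all n sensitivities dJ/dx0 in one backward sweep, while the perturbation approach needs n + 1 forward runs to obtain the same gradient.

```python
import numpy as np

def forward(x0, A, nsteps):
    """Toy linear 'model': x_{k+1} = A x_k. Returns the final state x_N."""
    x = x0.copy()
    for _ in range(nsteps):
        x = A @ x
    return x

def cost(x0, A, c, nsteps):
    """Scalar cost J = c . x_N (a stand-in for, e.g., a transport or melt rate)."""
    return c @ forward(x0, A, nsteps)

def adjoint_gradient(A, c, nsteps):
    """dJ/dx0 = (A^T)^N c: ONE backward sweep gives all n sensitivities.

    The model is linear, so no forward trajectory is needed here; a nonlinear
    model would also need its stored forward states.
    """
    lam = c.copy()
    for _ in range(nsteps):
        lam = A.T @ lam
    return lam

def perturbation_gradient(x0, A, c, nsteps, eps=1e-3):
    """One perturbed forward run per input component: n + 1 runs in total.

    The model is linear, so the one-sided difference is exact up to roundoff.
    """
    J0 = cost(x0, A, c, nsteps)
    grad = np.empty_like(x0)
    for i in range(x0.size):
        xp = x0.copy()
        xp[i] += eps
        grad[i] = (cost(xp, A, c, nsteps) - J0) / eps
    return grad

rng = np.random.default_rng(0)
n, nsteps = 50, 20
A = np.eye(n) + 0.01 * rng.standard_normal((n, n))
x0 = rng.standard_normal(n)
c = rng.standard_normal(n)

g_adj = adjoint_gradient(A, c, nsteps)          # cost: about one model run
g_fd = perturbation_gradient(x0, A, c, nsteps)  # cost: n + 1 = 51 model runs
print("max difference:", np.max(np.abs(g_adj - g_fd)))
```

The gap widens with problem size. For an ocean model with millions of control variables the perturbation approach is impractical, whereas the adjoint cost stays at a fixed multiple of one forward run.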

The project brought together ARCHER specialists, a model manager at the British Antarctic Survey, and scientific programmers at Argonne National Laboratory and the Massachusetts Institute of Technology (MIT) to develop and optimise the forward and adjoint model test suites.

Achievement of objectives

This project aimed to bolster the ARCHER community's access to oceanic and cryospheric adjoint modelling by developing, optimising, and documenting a forward model test suite and an adjoint model test suite. These suites are intended as the foundation for future numerical sensitivity experiments and state/parameter estimates, and they complement the existing MITgcm verification experiments distributed with the source code. Whereas the verification suite is designed to run quickly on any platform as a simple code regression check, the suite developed in this project is designed specifically to test and exploit ARCHER's large-scale parallel computational environment, and it focuses on adjoint applications. Because both the ocean and ice models must rapidly and repeatedly solve large linear systems, part of this work involved optimising these linear solvers using an external library (PETSc), which benefits forward and adjoint computation alike.

The following objectives were achieved:

- Ported four MITgcm ocean model test cases to ARCHER (two forward, two adjoint using OpenAD).

- Created a build options file specifically for use on ARCHER. This new build options file has been checked into the MITgcm repository and is tested daily.

- Implemented DIVA (divided adjoint, which allows adjoint simulations to be restarted) in OpenAD.

- Developed an optimised treatment for algorithmic differentiation of an important glaciological fixed-point problem.
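A glaciological fixed-point problem of this kind is typically solved by iterating a map x ← F(x, p) until it converges. The publication listed under Relevant documents describes the treatment actually developed. The Python sketch below only illustrates one standard strategy for differentiating a converged fixed point, the adjoint fixed-point iteration (often called reverse accumulation of fixed points), and should not be read as that paper's implementation. The map F, the matrix B and the cost J are hypothetical. Naive AD would record every forward iteration for the reverse sweep. This approach instead linearises about the converged state x* only and iterates λ ← (∂F/∂x)ᵀ λ + ∂J/∂x, which converges whenever the forward map is a contraction.

```python
import numpy as np

def F(x, p, B):
    """Hypothetical contraction map standing in for one Picard-type iteration."""
    return B @ np.tanh(x) + p

def solve_forward(p, B, tol=1e-13, maxit=1000):
    """Iterate x <- F(x, p) to convergence; only the converged x* is kept."""
    x = np.zeros_like(p)
    for _ in range(maxit):
        x_new = F(x, p, B)
        if np.linalg.norm(x_new - x) < tol:
            return x_new
        x = x_new
    raise RuntimeError("forward fixed point did not converge")

def cost(p, B):
    """Scalar cost J = 0.5 * |x*(p)|^2."""
    x = solve_forward(p, B)
    return 0.5 * x @ x

def grad_adjoint_fixed_point(p, B, tol=1e-13, maxit=1000):
    """dJ/dp via an adjoint fixed-point iteration linearised about x* only.

    Solves lam = (dF/dx)^T lam + dJ/dx; since dF/dp = I here, dJ/dp = lam.
    """
    x = solve_forward(p, B)
    dJdx = x                               # gradient of 0.5*|x|^2
    Fx = B * (1.0 - np.tanh(x) ** 2)       # dF/dx = B @ diag(sech^2(x))
    lam = np.zeros_like(x)
    for _ in range(maxit):
        lam_new = Fx.T @ lam + dJdx
        if np.linalg.norm(lam_new - lam) < tol:
            return lam_new
        lam = lam_new
    raise RuntimeError("adjoint fixed point did not converge")

rng = np.random.default_rng(2)
n = 10
B = 0.25 * rng.standard_normal((n, n)) / np.sqrt(n)   # ||B|| < 1: contraction
p = rng.standard_normal(n)

g_adj = grad_adjoint_fixed_point(p, B)

# Central finite differences: two full forward solves per component of p
eps = 1e-5
g_fd = np.array([(cost(p + eps * e, B) - cost(p - eps * e, B)) / (2 * eps)
                 for e in np.eye(n)])
print("max difference:", np.max(np.abs(g_adj - g_fd)))
```

The memory benefit is the point of the design. The adjoint needs only x*, not the full iteration history, so its storage cost does not grow with the number of forward iterations.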

Summary of the Software

General circulation model (MITgcm)

The MITgcm is a flexible, open-source general circulation model that is widely used by the global scientific community (including many in the ARCHER community) to study the ocean, atmosphere, cryosphere, and climate. It has been run on numerous HPC platforms (e.g. ARCHER, HECToR, NASA Columbia, NASA Pleiades, NSF Yellowstone) in a wide variety of single-core and multi-core configurations (300-1000 core applications are common). Over 100 research articles published in 2013 involved MITgcm in some way. MITgcm is also highly modular, featuring a wide variety of "packages" that can be activated or de-activated depending on the experimental design. For instance, sea ice, biogeochemistry, and/or sub-grid scale mixing can be included if needed.

Algorithmic differentiation tools

One of the most useful features of MITgcm is its adjoint modelling capability. The main code has been developed alongside two algorithmic differentiation (AD) tools, TAF and OpenAD, which take large, frequently updated numerical code as input and produce "differentiated" code as output. The differentiated code can be used for sensitivity analysis, optimisation, and state estimation. Because TAF and OpenAD produce compilable source code, the adjoint model inherits many of the properties of the forward model, such as domain decomposition, multithreading, and choice of linear solvers. Gains from optimising forward model performance therefore carry over directly to adjoint model performance. TAF is a mature commercial package that produces very efficient code but is expensive to use, while OpenAD is a newer package that is less efficient but open-source and free to use.
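As a rough picture of what "differentiated" code contains, the following hand-written Python sketch mimics the structure that source-transformation AD produces for a small nonlinear time-stepping loop. It is not output from TAF or OpenAD, which operate on Fortran, and the model u ← u + Δt(∇²u − u³) is a hypothetical example. The forward sweep stores the states the adjoint needs. The tangent-linear code propagates perturbations forward, and the adjoint replays the same steps in reverse order with each operator transposed. The final lines apply the standard dot-product test, ⟨L δx, δy⟩ = ⟨δx, Lᵀ δy⟩, which is commonly used to check that an adjoint is consistent with its tangent-linear model.

```python
import numpy as np

DT, NSTEPS = 0.1, 30

def laplacian(u):
    """Periodic second difference. The operator is symmetric, so it is its own adjoint."""
    return np.roll(u, 1) - 2.0 * u + np.roll(u, -1)

def forward(u0):
    """Nonlinear model u <- u + DT*(lap(u) - u^3); also returns the trajectory."""
    u = u0.copy()
    traj = []
    for _ in range(NSTEPS):
        traj.append(u.copy())              # states the adjoint sweep will need
        u = u + DT * (laplacian(u) - u**3)
    return u, traj

def tangent_linear(traj, du0):
    """Linearised model: propagate a perturbation forward along the trajectory."""
    du = du0.copy()
    for u in traj:
        du = du + DT * (laplacian(du) - 3.0 * u**2 * du)
    return du

def adjoint(traj, lam):
    """Transpose of tangent_linear: same steps, replayed in reverse order."""
    lam = lam.copy()
    for u in reversed(traj):
        lam = lam + DT * (laplacian(lam) - 3.0 * u**2 * lam)
    return lam

rng = np.random.default_rng(3)
n = 64
u0 = 0.5 * rng.standard_normal(n)
_, traj = forward(u0)

# Dot-product test: <L dx, dy> must equal <dx, L^T dy> to roundoff
dx = rng.standard_normal(n)
dy = rng.standard_normal(n)
lhs = tangent_linear(traj, dx) @ dy
rhs = dx @ adjoint(traj, dy)
print("dot-product test:", lhs, rhs)
```

Storing the whole trajectory is the simplest choice and becomes infeasible for long ocean runs. Production adjoints instead use checkpointing and recomputation strategies to trade memory for extra forward computation.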

Linear solvers within MITgcm

The ocean and ice-sheet codes involve the repeated solution of large-scale linear systems, which accounts for between 25% and 80% of forward model computation. Because these linear systems are self-adjoint, the adjoint model can solve them with the same solvers as the forward model, so linear solves affect forward and adjoint model performance in a similar way. It is therefore crucial to optimise their performance.
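The reason self-adjointness lets the adjoint reuse the forward solver can be shown in a few lines. If the forward model solves A x = b and J = g · x, then dJ/db = A⁻ᵀ g, which for symmetric A is simply A⁻¹ g: one more call to the same solver with a different right-hand side. In the minimal Python sketch below, `np.linalg.solve` stands in for the model's solver, and the matrix and vectors are random test data, not MITgcm quantities.

```python
import numpy as np

def model_solve(A, rhs):
    """Stand-in for the model's linear solver (A assumed symmetric positive definite)."""
    return np.linalg.solve(A, rhs)

rng = np.random.default_rng(1)
n = 40
M = rng.standard_normal((n, n))
A = M @ M.T + n * np.eye(n)        # symmetric positive definite test matrix
b = rng.standard_normal(n)         # forcing / right-hand side
g = rng.standard_normal(n)         # cost J(x) = g . x

x = model_solve(A, b)              # forward:  A x = b
lam = model_solve(A, g)            # adjoint:  A^T lam = g, and A^T = A
dJdb = lam                         # dJ/db = A^{-T} g

# Check against finite differences (J is linear in b, so this is exact to roundoff)
eps = 1e-3
J0 = g @ x
fd = np.array([(g @ model_solve(A, b + eps * e) - J0) / eps for e in np.eye(n)])
print("max difference:", np.max(np.abs(dJdb - fd)))
```

For a non-symmetric operator the adjoint would instead need a solve with Aᵀ, which in general requires a separate solver configuration.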

PETSc is a leading open-source software package for the efficient parallel solution of large linear systems. An MITgcm interface to the PETSc library has been written (for the ice model only), and some investigation of preconditioner and solver suitability has been carried out. However, that investigation was far from exhaustive, and the MITgcm-PETSc interface has not yet been tested on ARCHER. No such interface has yet been written for the ocean model, although its development is expected to be straightforward. Once it is implemented, the performance of the various PETSc preconditioners and solvers will be compared in the context of the test suite.
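As a small-scale illustration of why the choice of preconditioner matters, the following sketch uses plain NumPy rather than PETSc. It compares iteration counts for conjugate gradient with and without a Jacobi (diagonal) preconditioner on a badly scaled symmetric positive definite test matrix. The matrix, tolerance and the `pcg` function are hypothetical and say nothing about MITgcm's actual systems. In PETSc itself, comparable choices are normally made through run-time options such as `-ksp_type cg` and `-pc_type jacobi`.

```python
import numpy as np

def pcg(A, b, M_inv_diag=None, tol=1e-8, maxit=10000):
    """Conjugate gradient, optionally with a Jacobi (diagonal) preconditioner.

    Returns the solution and the number of iterations needed to reach
    ||r|| <= tol * ||b||, where r is the recursively updated residual.
    """
    n = b.size
    if M_inv_diag is None:
        M_inv_diag = np.ones(n)            # identity preconditioner = plain CG
    x = np.zeros(n)
    r = b - A @ x
    z = M_inv_diag * r
    p = z.copy()
    rz = r @ z
    bnorm = np.linalg.norm(b)
    for k in range(1, maxit + 1):
        Ap = A @ p
        alpha = rz / (p @ Ap)
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) <= tol * bnorm:
            return x, k
        z = M_inv_diag * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    raise RuntimeError("CG did not converge")

# Badly scaled SPD test matrix: A = S T S, with T = tridiag(-1, 4, -1)
n = 100
T = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
s = np.logspace(0, 2, n)
A = s[:, None] * T * s[None, :]
b = np.random.default_rng(4).standard_normal(n)

x_plain, it_plain = pcg(A, b)
x_jac, it_jac = pcg(A, b, M_inv_diag=1.0 / np.diag(A))
print("iterations, no preconditioner:", it_plain)
print("iterations, Jacobi preconditioner:", it_jac)
```

Jacobi scaling removes exactly the kind of diagonal imbalance built into this test matrix, which is why it helps so much here. On real model systems the best solver and preconditioner pairing has to be found empirically.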