Full parallelism of optimal flow control calculations with industrial applications

eCSE03-12

Key Personnel

PI/Co-I: Xuerui Mao, Georgios Theodoropoulos, Stephen McGough - Durham University

Technical: Bofu Wang - Durham University

Relevant documents

eCSE Technical Report: Full parallelism of calculations of optimal flow control

Project summary

SEMTEX is an open source quadrilateral spectral element Direct Numerical Simulation (DNS) code that uses standard nodal Gauss-Lobatto-Legendre (GLL) basis functions and (optionally) Fourier expansions in a homogeneous direction to provide three-dimensional solutions for the Navier-Stokes (NS) equations.

SEMTEX, and the code developed on top of it, enable DNS, stability analysis and flow control for 2D flows and for 3D flows with one homogeneous direction. Applications include the study of the reduction of turbulent friction drag and the suppression of vortex induced vibrations. Prior to this project, SEMTEX was limited to the study of small to moderate-scale problems because of its limited parallel implementation: with parallel computation enabled only in the homogeneous direction, the code scaled poorly beyond a few hundred processors.

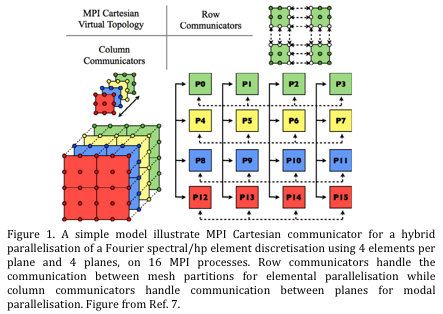

This project aimed to parallelize the 2D problem in each plane and so improve scalability. The fully parallelized code first decomposes a 3D problem into multiple 2D problems, as in the previous implementation, and then splits each 2D problem across multiple cores. The 2D parallelism over the elements in each plane is thus combined with the Fourier parallelism in the homogeneous direction to achieve a fully 3D parallelisation.
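The two-level decomposition described above can be illustrated with a minimal sketch. It is not taken from the SEMTEX source: the function names, the row-major rank layout and the contiguous block partitioning are all assumptions chosen for illustration. Each rank is assigned a block of Fourier modes (the pre-existing parallelism in the homogeneous direction) and a block of spectral elements within each plane (the new 2D parallelism).

```python
def split_range(n_items, n_parts, part):
    """Return the half-open block [start, stop) of n_items owned by `part`.

    Items are dealt out as evenly as possible; the first
    (n_items % n_parts) parts receive one extra item.
    """
    base, extra = divmod(n_items, n_parts)
    start = part * base + min(part, extra)
    stop = start + base + (1 if part < extra else 0)
    return start, stop


def decompose(rank, n_plane_groups, n_elem_parts, n_modes, n_elements):
    """Map a flat rank to its Fourier-mode block and its element block.

    Hypothetical layout: ranks are arranged as an
    n_plane_groups x n_elem_parts grid in row-major order.
    """
    if not 0 <= rank < n_plane_groups * n_elem_parts:
        raise ValueError("rank lies outside the process grid")
    plane_group, elem_part = divmod(rank, n_elem_parts)
    return {
        "plane_group": plane_group,
        "elem_part": elem_part,
        # Level 1: Fourier modes split in the homogeneous direction.
        "modes": split_range(n_modes, n_plane_groups, plane_group),
        # Level 2: elements of each 2D plane split across cores.
        "elements": split_range(n_elements, n_elem_parts, elem_part),
    }
```

Under this mapping, with 8 ranks arranged as 4 mode groups by 2 element partitions, 16 Fourier modes and 100 elements per plane, `decompose(5, 4, 2, 16, 100)` assigns rank 5 the modes 8 to 11 and the elements 50 to 99. The total core count is the product of the two levels, which is why adding the in-plane level lifts the ceiling imposed by the number of Fourier modes alone.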

There is a growing research community making use of this code both within the UK and internationally. The applications range from fluid physics - e.g. stability, dynamics of non-Newtonian flow and bioflow, non-normality and receptivity - to industrial design - e.g. wind turbines, Formula One cars and deep-ocean oil risers. We anticipate that the successful further parallelisation of the code and delivery of a scalable solution will be the catalyst for the exploitation of the software by an ever larger community of researchers and users across the globe, rendering ARCHER a focal point of interest in the domain. The flow control techniques and knowledge developed from this project will also benefit the oil industry (design of oil risers with reduced vortex induced vibrations), aviation (drag reduction to reduce CO2 emissions) and the wind energy industry (advanced wake flow models and optimal layout of turbines in offshore wind farms).

Achievement of objectives

The key objective of this project was to fully parallelize the well-tested SEMTEX code. The 2D parallelism as well as full 3D parallelism have been successfully implemented. Performance and scalability improvements have been achieved, with very large problems, such as flow around a cylinder at Reynolds numbers greater than one million, having been run successfully using the fully parallelized 3D DNS.

The overall improvement in the performance of the code will accelerate research in developing disruptive technologies in flow control, which has been recognized as the leading edge of fluid dynamics. Several world leading groups are investigating flow control techniques, e.g. fluid groups in the Department of Mathematics and Department of Aeronautics at Imperial College London, LadHyX at Ecole Polytechnique, and the Linne Flow Centre at KTH Royal Institute of Technology, all of whom have either previously collaborated with, or have shown interest in, the optimal flow control work conducted by the PI. The project therefore offers the opportunity to strengthen the leading international position of the UK in the rapidly developing area of laminar/turbulent flow control, e.g. reduction of turbulent friction drag and suppression of vortex induced vibrations, both of which are areas of research in which there is currently much interest.

The improvement not only reduces the resources needed for current simulations, but also enables simulations on a much larger scale than was previously possible, in terms of both spatial extent and resolution. The improvements in the code's performance and scalability will enable it to be used for fully simulating turbulent flow, in the sense of both direct numerical simulation and large eddy simulation. Problems such as laminar-turbulent transition in boundary layers and turbulent wake interaction in large-scale wind farms are of interest in our recent research. Accurate modelling and simulation of these flows are crucial to understanding transition mechanisms and applying flow control in real applications, and SEMTEX will be able to make a contribution here.

Summary of the Software

SEMTEX has been compiled and run on a variety of HPC platforms. The code is mainly written in C and C++ and linked with standard libraries such as BLAS and LAPACK, which are exposed through Fortran interfaces. It uses MPI as the message-passing kernel and binary formats for input and output. The code is relatively small (less than 10 MB) and easy to modify. It is also very fast when the problem is small and the poorly-conditioned pressure Poisson equation is solved directly (via band-optimised Cholesky decomposition) rather than iteratively. As a minimum, C++, C and F77 (or later Fortran) compilers, the GNU version of make, yacc (or bison), and the BLAS and LAPACK libraries with their associated header files are needed. For parallel compilation the MPI libraries and headers are also needed. Most of these components are readily available on modern Unix/Linux/Mac systems.
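To illustrate the direct solve mentioned above, the following is a minimal sketch of a Cholesky factorisation that exploits a banded structure. It is not SEMTEX's implementation: the function names are hypothetical, and the matrix is held in dense storage purely for readability, whereas a production solver would typically use packed band storage (for example via LAPACK's banded Cholesky routines such as `dpbtrf`). The point shown is that, for a symmetric positive-definite matrix with half-bandwidth `bandwidth`, every inner sum can be restricted to the band, so the cost grows with the bandwidth rather than with the full matrix size.

```python
import math


def banded_cholesky(A, bandwidth):
    """Factor a symmetric positive-definite banded matrix as A = L L^T.

    Only entries within `bandwidth` of the diagonal are read or written;
    the Cholesky factor of a banded matrix has the same lower bandwidth.
    """
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for j in range(n):
        lo = max(0, j - bandwidth)
        diag = A[j][j] - sum(L[j][k] ** 2 for k in range(lo, j))
        if diag <= 0.0:
            raise ValueError("matrix is not positive definite")
        L[j][j] = math.sqrt(diag)
        for i in range(j + 1, min(n, j + bandwidth + 1)):
            lo_i = max(0, i - bandwidth)
            s = sum(L[i][k] * L[j][k] for k in range(lo_i, j))
            L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def banded_solve(L, b, bandwidth):
    """Solve (L L^T) x = b by forward then backward substitution."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):  # forward substitution: L y = b
        lo = max(0, i - bandwidth)
        y[i] = (b[i] - sum(L[i][k] * y[k] for k in range(lo, i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):  # backward substitution: L^T x = y
        hi = min(n, i + bandwidth + 1)
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, hi))) / L[i][i]
    return x
```

As a usage example, the tridiagonal matrix of a 1D Poisson problem (2 on the diagonal, -1 on the off-diagonals) has `bandwidth = 1`, so it can be factored once with `banded_cholesky` and the factor reused in `banded_solve` for each new right-hand side.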

The source code is currently available at: http://users.monash.edu.au/~bburn/semtex.html

The new functionality will be incorporated into a future SEMTEX release and made available to the entire user community.