A fast coupling interface for a high-order acoustic perturbation equations solver with finite volume CFD codes to predict complex jet noise

eCSE12-20

Key Personnel

PI/Co-I: Dr H. Xia; Prof G. Page

Technical: Dr M. Angelino; Mr M. Moratilla-Vega

Relevant Documents

eCSE Technical Report: A fast coupling interface for a high-order acoustic perturbation equations solver with finite volume CFD codes to predict complex jet noise

Project summary

In this project, our aim was to develop a fast interface for a high-order acoustic perturbation equations solver, APESolver, to couple with finite volume Navier-Stokes codes that are widely used in the UK Turbulence, Applied Aerodynamics and ARCHER communities.

Driven by increasingly stringent design requirements, the demand for multi-physics modelling capabilities is growing fast. Well-established modelling tools for individual disciplines need to run simultaneously while exchanging coupling data efficiently.

APESolver, part of the Nektar++ software framework (www.nektar.info), is an open-source p-order polynomial spectral element code that solves the linear Acoustic Perturbation Equations (APE) to obtain flow-induced acoustic fields in time and space. It is well suited to capturing sound-wave dilatation and scattering around complex geometries with high accuracy, for example jet noise emitted from civil aircraft, where the source terms can be determined from solutions of the compressible Navier-Stokes (N-S) equations computed with well-established finite volume CFD codes. Since the sound source data are volumetric and time-dependent, a fast, parallel and memory-efficient coupling is essential, and the best way to achieve this is via MPI.
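A rough estimate shows why the volume of source data drives this choice. The C++ sketch below computes how many bytes must be transferred per coupling exchange and over a whole run, assuming every source point carries a fixed number of double-precision values. The function name and the input values used afterwards are hypothetical, chosen only for illustration rather than taken from the project.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative size of the volumetric source data that a coupling has to move.
// All inputs are hypothetical, not project figures.
struct SourceDataSize {
    std::uint64_t perExchange; // bytes transferred at one coupling step
    std::uint64_t total;       // bytes transferred over the whole run
};

// Assumes every source point carries nVariables double-precision values.
SourceDataSize estimateSourceData(std::uint64_t nPoints,
                                  std::uint64_t nVariables,
                                  std::uint64_t nExchanges)
{
    assert(nPoints > 0 && nVariables > 0);
    const std::uint64_t perExchange = nPoints * nVariables * sizeof(double);
    return {perExchange, perExchange * nExchanges};
}
```

With, say, 10 million source points, 5 variables and 10,000 exchanges, this gives 400 MB per exchange and 4 TB over the run. A file-based workflow has to write all of it to disk and read it back, whereas an MPI coupling sends it directly between processes.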

To couple with APESolver, we chose two mainstream finite volume N-S codes, HYDRA and OpenFOAM, based on their popularity and availability within the community (proprietary and free, respectively). The chosen N-S codes use cell-vertex and cell-centred control volumes respectively, so our coupling implementation works with both types of connectivity commonly used within the CFD community.
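Supporting both arrangements mainly changes where the CFD values live, and therefore which points the coupling library has to locate inside the acoustic mesh. The following C++ sketch, with hypothetical names, returns the mesh vertices for a cell-vertex code and approximate cell centres for a cell-centred code. It assumes each cell is given as a list of vertex indices and uses the vertex average as the cell centre, which is exact for parallelepiped cells but only an approximation for distorted ones; OpenFOAM itself computes geometric cell centres.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

using Point = std::array<double, 3>;

// Hypothetical enum describing where a finite volume code stores its unknowns.
enum class DataLocation { CellVertex, CellCentred };

// Returns the coordinates at which the CFD code provides field values, i.e.
// the points a coupling library must locate in the acoustic mesh.
// cells[c] lists the vertex indices of cell c.
std::vector<Point> couplingPoints(const std::vector<Point>& vertices,
                                  const std::vector<std::vector<std::size_t>>& cells,
                                  DataLocation location)
{
    if (location == DataLocation::CellVertex) {
        return vertices; // values are stored at the mesh vertices
    }
    std::vector<Point> centres;
    centres.reserve(cells.size());
    for (const auto& cell : cells) {
        assert(!cell.empty());
        Point c{0.0, 0.0, 0.0};
        for (std::size_t v : cell) {
            for (int d = 0; d < 3; ++d) c[d] += vertices[v][d];
        }
        // Vertex average as an approximate cell centre (assumption).
        for (int d = 0; d < 3; ++d) c[d] /= static_cast<double>(cell.size());
        centres.push_back(c);
    }
    return centres;
}
```

For a single unit-cube cell, the cell-centred variant yields one point at (0.5, 0.5, 0.5), while the cell-vertex variant yields the eight corners.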

Achievement of objectives

There were four main objectives defined in the project:

i) To implement coupling functionalities between HYDRA and APESolver.

The library used for the coupling was CWIPI, which was already implemented in APESolver. CWIPI was successfully implemented in HYDRA, and the coupling implementation was validated against the existing file-based methodology on a cylinder vortex-shedding case.

ii) To implement the same functionalities as in i) for OpenFOAM coupled with APESolver.

CWIPI was successfully implemented in OpenFOAM using the rhoPimpleFoam solver. The same cylinder case was used to validate the implementation.

iii) To create a library of API subroutines/functions from the implementations in i) and ii) to form a wrapper layer.

An API wrapper library has been created that encapsulates as much of the coupling implementation as possible, in Fortran (using HYDRA's implementation as a reference) and in C++ for OpenFOAM.

iv) To make the project's outcome available to the community.

The modified codes (under their original licence agreements) and the interface wrapper library are available on GitHub.
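The HYDRA and OpenFOAM couplings were both validated by comparing the coupled runs with the existing file-based approach on the cylinder case. The report does not say which metric was used; one common choice, assumed here purely as an illustration, is the relative L2 difference between the two pressure-perturbation fields sampled at the same points, sketched below in C++ with a hypothetical function name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Relative L2 difference ||a - b|| / ||b|| between two sampled fields, e.g.
// pressure perturbation from the MPI-coupled run (a) and from the file-based
// reference run (b), both sampled at the same points.
double relativeL2Difference(const std::vector<double>& a,
                            const std::vector<double>& b)
{
    assert(a.size() == b.size() && !b.empty());
    double diff2 = 0.0;
    double ref2 = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = a[i] - b[i];
        diff2 += d * d;
        ref2 += b[i] * b[i];
    }
    assert(ref2 > 0.0); // the reference field must not be identically zero
    return std::sqrt(diff2 / ref2);
}
```

A value close to zero indicates that the on-the-fly coupling reproduces the file-based result; an acceptable threshold would have to be chosen for the case at hand.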

Summary of the software

Three different codes have been used during this project. HYDRA is the in-house code of Rolls-Royce plc and it is mainly used for compressible flows. It is an unstructured, cell-vertex, second-order finite-volume code.

The second code is OpenFOAM, an open-source code implemented in C++ for arbitrary unstructured meshes. It is also based on a second-order finite-volume discretisation in which the variables are stored at the cell centres.

The third code is the open-source spectral/hp element framework Nektar++, of which APESolver is part. The open-source library CWIPI was used for the coupling between the flow solvers (HYDRA and OpenFOAM) and the acoustic code (APESolver).

The coupling wrapper interface developed during the project is available in a GitHub repository, together with instructions for coupling OpenFOAM and other Fortran codes with a similar structure. It can be found at: https://github.com/mimove?tab=repositories
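The benefit of a wrapper layer is that each flow solver calls a small, fixed set of functions, while the coupling library itself is touched in one place. The C++ sketch below shows that idea with an abstract class. The class and method names are hypothetical and do not reproduce the API in the project repository, and the in-memory implementation stands in for a CWIPI-based one so that the example runs without MPI.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical solver-agnostic coupling API. A CFD code calls only these
// methods; the coupling library behind them stays hidden in one class.
class CouplingInterface {
public:
    virtual ~CouplingInterface() = default;
    virtual void defineMesh(const std::vector<double>& coords) = 0;
    virtual void sendField(const std::string& name,
                           const std::vector<double>& values) = 0;
    virtual std::vector<double> receiveField(const std::string& name) = 0;
};

// In-memory stand-in for testing: fields are stored locally instead of
// being exchanged between processes.
class InMemoryCoupling : public CouplingInterface {
public:
    void defineMesh(const std::vector<double>& coords) override {
        assert(coords.size() % 3 == 0); // x, y, z per coupling point
        nPoints_ = coords.size() / 3;
    }
    void sendField(const std::string& name,
                   const std::vector<double>& values) override {
        assert(values.size() == nPoints_); // one value per coupling point
        fields_[name] = values;
    }
    std::vector<double> receiveField(const std::string& name) override {
        return fields_.at(name);
    }

private:
    std::size_t nPoints_ = 0;
    std::map<std::string, std::vector<double>> fields_;
};

// Called from the CFD time loop; depends only on the abstract interface.
void exchangeSourceTerms(CouplingInterface& coupling,
                         const std::vector<double>& sourceTerm)
{
    coupling.sendField("source", sourceTerm);
}
```

Swapping the in-memory class for one that forwards to the real coupling library would leave the solver-side call `exchangeSourceTerms` unchanged, which is the property the wrapper layer is meant to provide.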

Scientific Benefits

Aircraft noise is a major concern to modern societies and can have strong negative health and economic impacts. The jet noise research community has made encouraging progress in recent years, thanks to advances in HPC and growing use of HPC resources. Computational approaches are now seen as increasingly promising for modelling isolated simple jets, but more needs to be done to understand installed jets, where the jet stream interacts with the aircraft wing, flaps and pylon, causing sound waves to reflect and scatter. All these efforts are necessary to help predict and potentially reduce noise.

This eCSE12-20 project specifically targets a computational issue that hinders efficient modelling of the propagation of sound waves leaving the turbulent jet stream. It uses the latest high-end parallel computing techniques so that massive amounts of data are exchanged in as parallel a manner as possible, removing the bottleneck between the turbulent flow simulation and the acoustic propagation.
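The idea of exchanging data in parallel can be illustrated by the communication plan a parallel coupler builds once the mesh partitions are known: every CFD rank works out which acoustic-solver ranks need its source values and sends to them directly. A coupling library such as CWIPI handles the geometric search and the messaging internally; the C++ sketch below does not use its API. It assumes the owning CFD rank and the receiving APE rank of each source point are already known, and only builds the per-pair message sizes. The struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// For each source point: the CFD rank that owns it and the APE rank whose
// partition contains it (found by a geometric search in a real coupler).
struct PointOwnership {
    int cfdRank;
    int apeRank;
};

// Builds counts[cfd][ape] = number of values CFD rank `cfd` must send to
// APE rank `ape` at every exchange. Each non-zero entry would correspond to
// one point-to-point message, so all ranks can communicate concurrently
// instead of funnelling data through a single file or process.
std::vector<std::vector<std::size_t>>
buildSendCounts(const std::vector<PointOwnership>& points,
                int nCfdRanks, int nApeRanks)
{
    assert(nCfdRanks > 0 && nApeRanks > 0);
    std::vector<std::vector<std::size_t>> counts(
        static_cast<std::size_t>(nCfdRanks),
        std::vector<std::size_t>(static_cast<std::size_t>(nApeRanks), 0));
    for (const auto& p : points) {
        assert(p.cfdRank >= 0 && p.cfdRank < nCfdRanks);
        assert(p.apeRank >= 0 && p.apeRank < nApeRanks);
        ++counts[static_cast<std::size_t>(p.cfdRank)]
                [static_cast<std::size_t>(p.apeRank)];
    }
    return counts;
}
```

The plan only needs to be built once if the meshes do not move; after that, each exchange reuses it, and each CFD rank talks only to the acoustic ranks whose partitions overlap its own.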

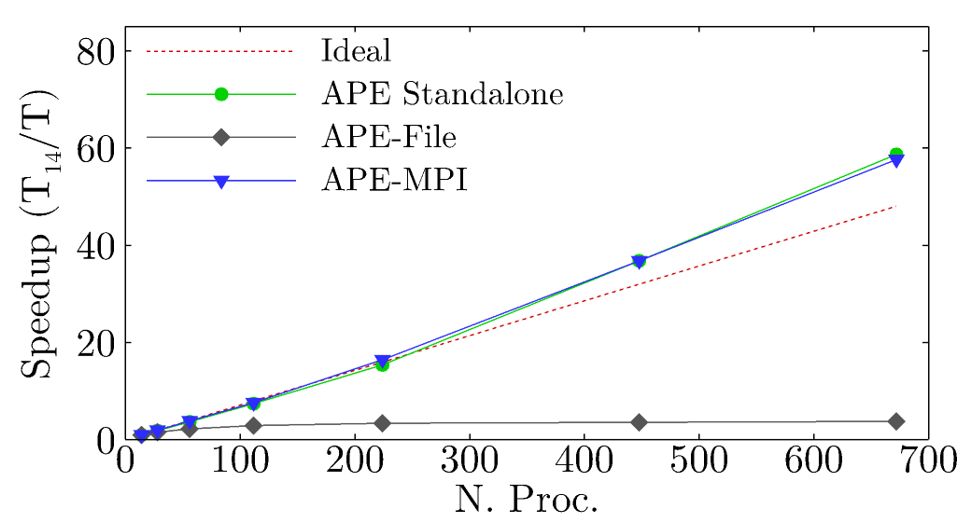

As a result, compared with the existing file-based coupling approach, the new parallel interface improves the overall computational efficiency by a factor of more than 10 for smaller problems and by more than 100 for larger problems, such as realistic configurations. This gives the research community a new tool with which to model and predict the far-field noise propagated from turbulent jets much more efficiently.

The open-source implementation of a fully parallel, on-the-fly coupling approach is also valuable to the ARCHER community. The software engineering outcomes of the project will benefit other research fields that may rely on high-end computing to tackle multi-physics problems.

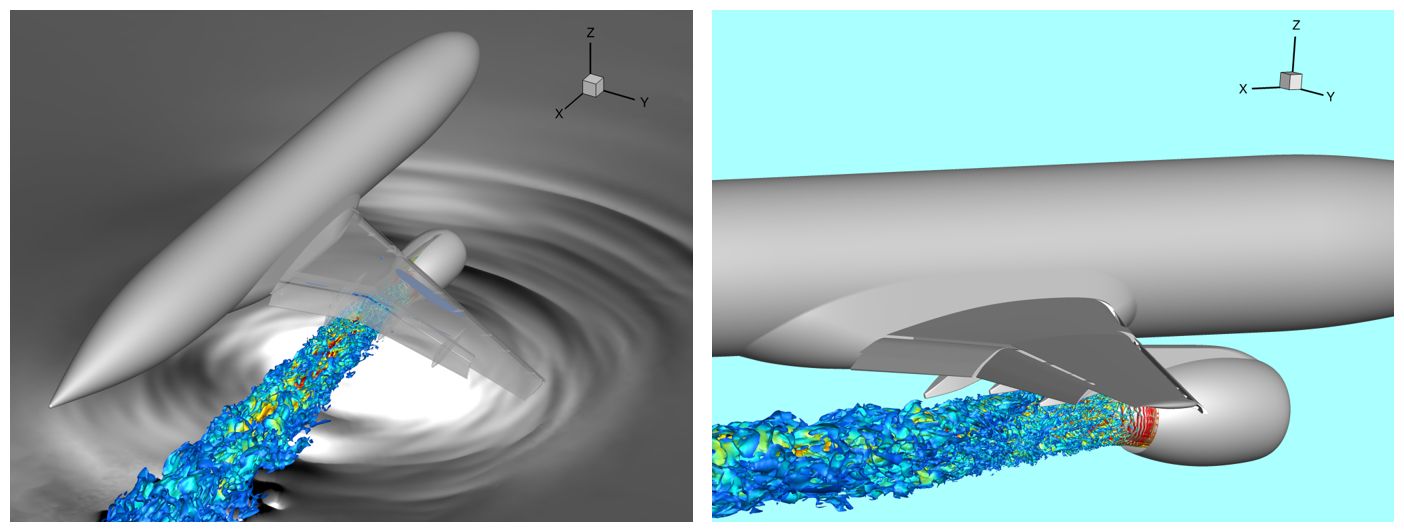

Figure 1: Jet wing/flap/pylon interaction.

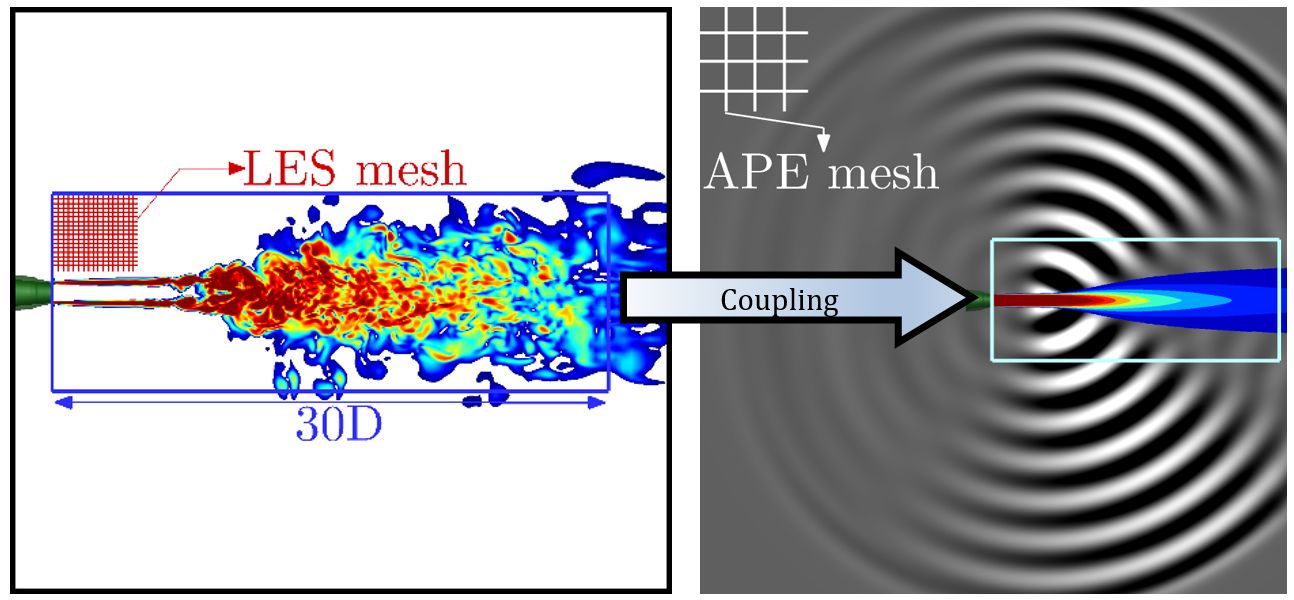

Figure 2: Coupling APE with captured noise sources

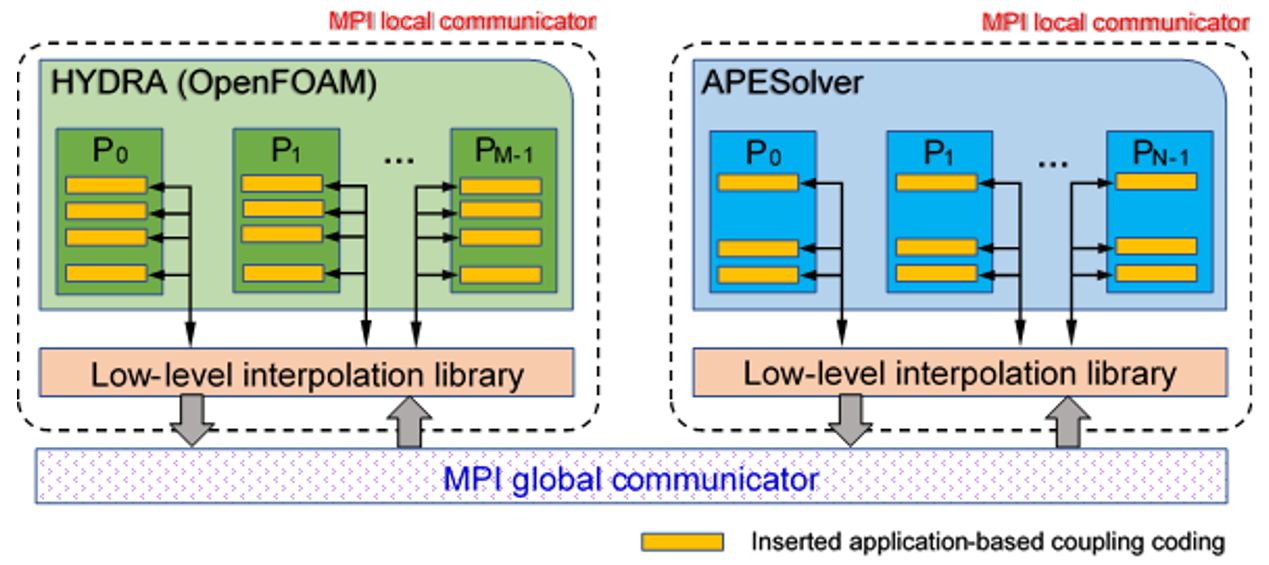

Figure 3: Diagram of coupling procedure with extensive modification to source codes

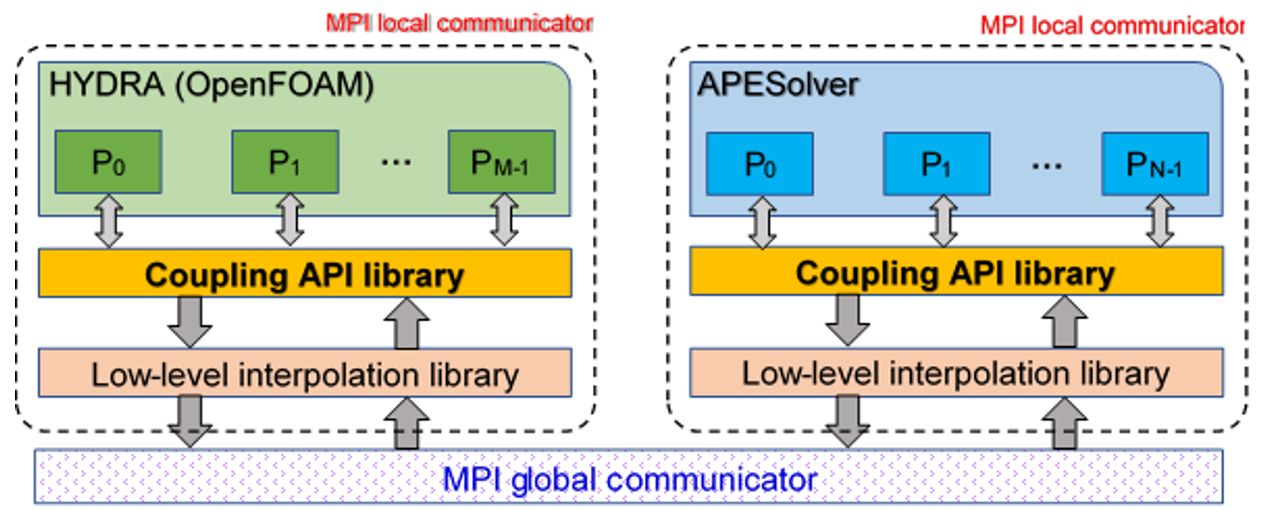

Figure 4: Diagram of coupling procedure with the common API library interface

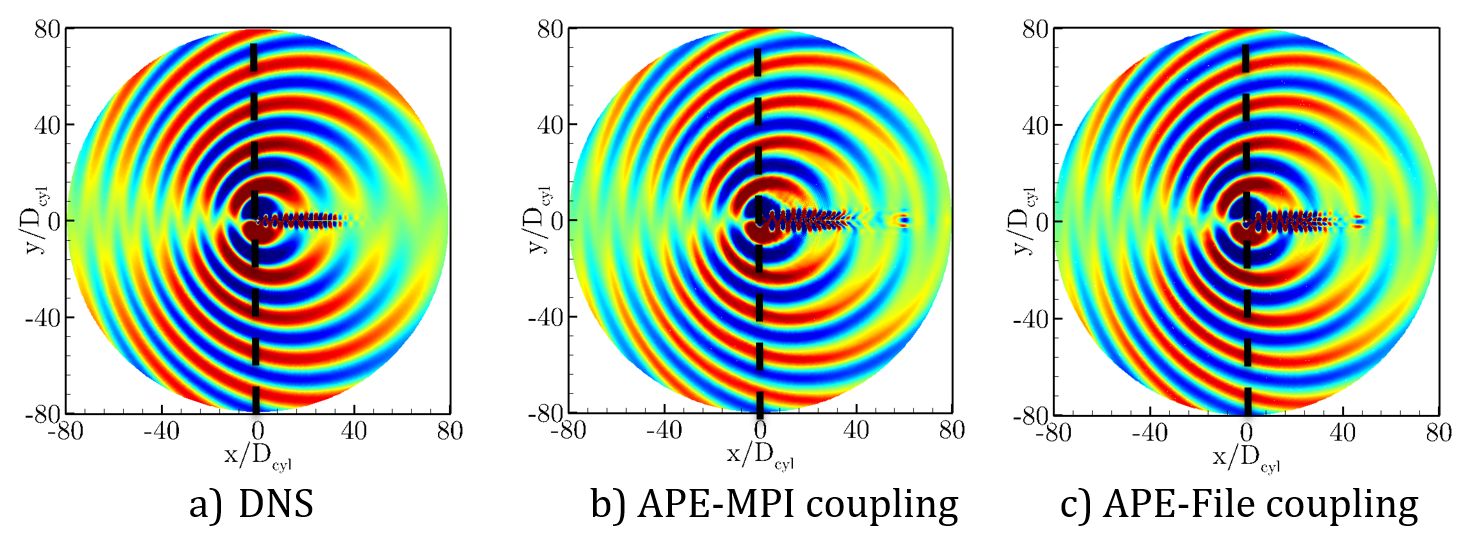

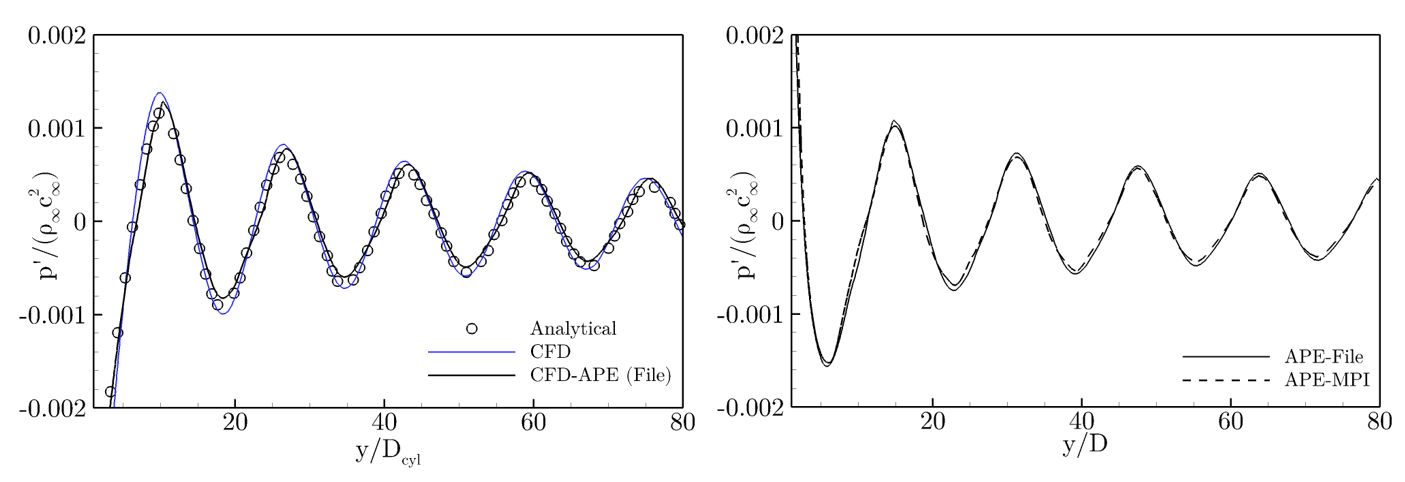

Figure 5: Acoustic field for the CFD and CFD/APE simulations

Figure 6: Coupling APE with captured noise sources

Figure 7: Coupling APE with captured noise sources

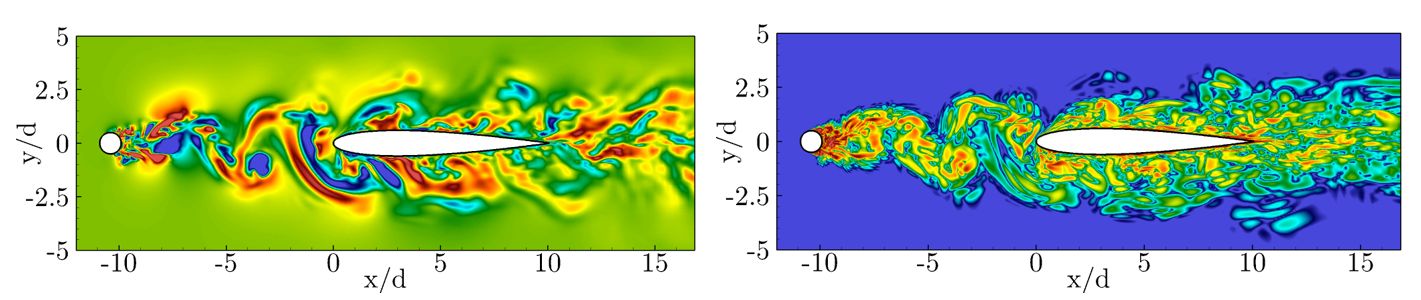

Figure 8: Contours of instantaneous spanwise vorticity and velocity fields obtained with HYDRA.

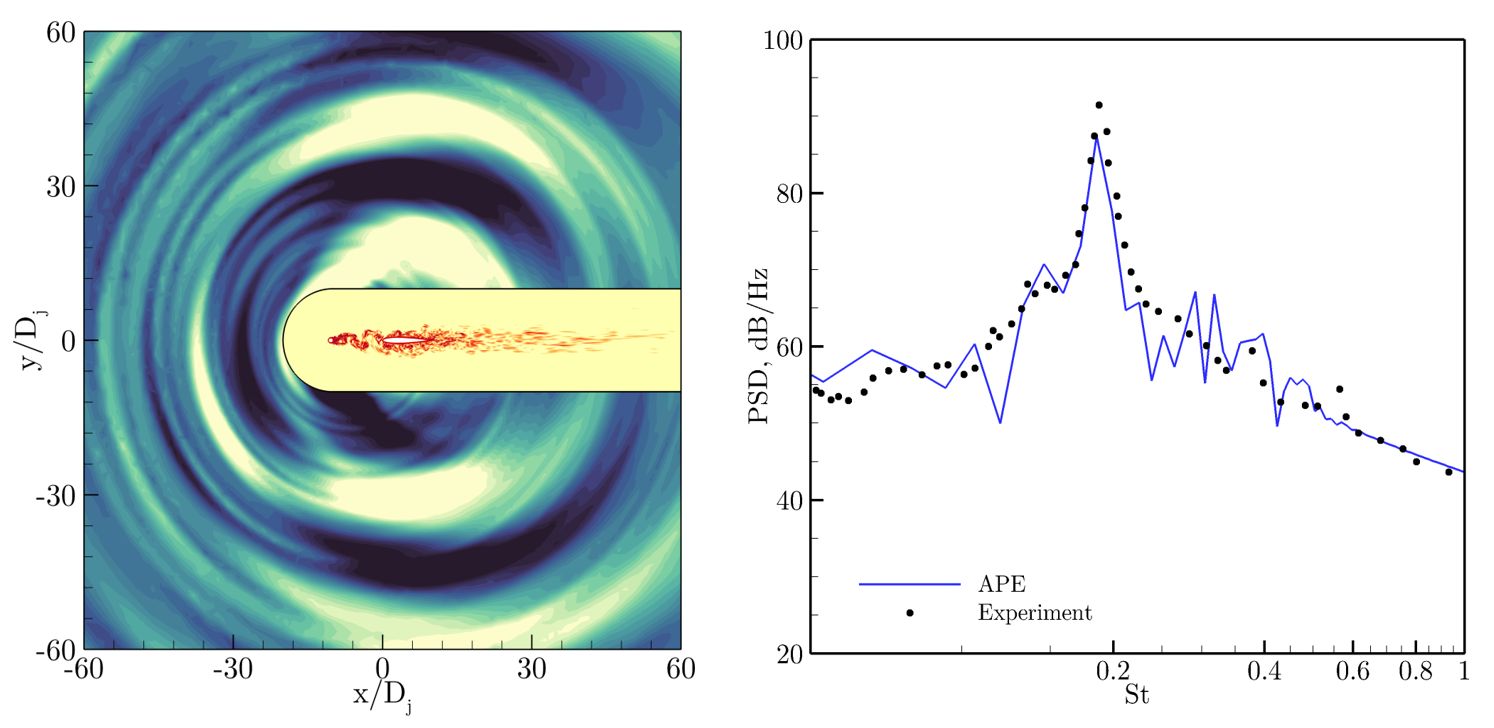

Figure 9: Contours of pressure perturbation (left) and power spectral density (right). Results obtained with HYDRA-Nektar++ coupled implementation.

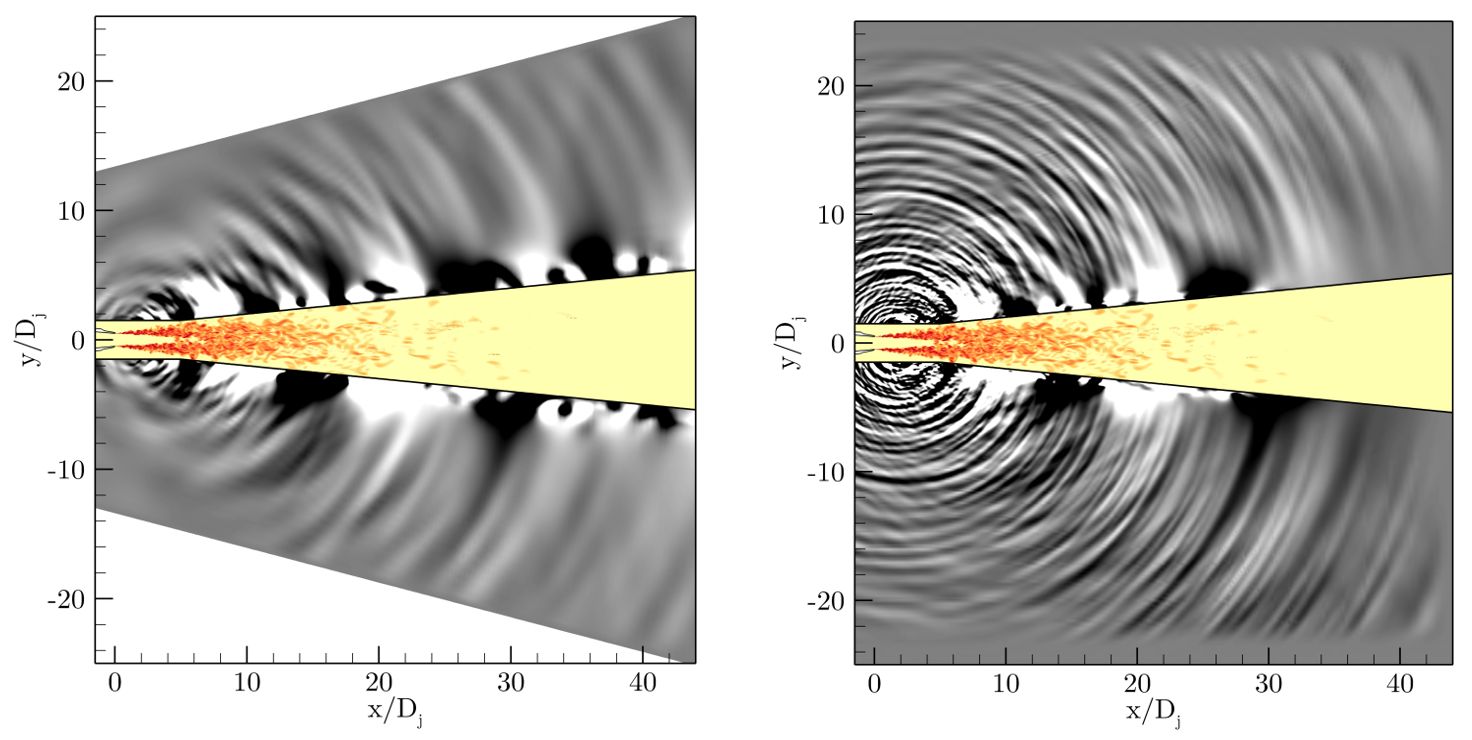

Figure 10: Acoustic field for the standalone OpenFOAM and the coupled OpenFOAM/Nektar++ cases.